Over the past few years, we have witnessed a series of dramatic changes in data, including how data is generated, processed, and further utilized to capture additional value and intelligence that are based on deep learning and neural network applications. The impact of emerging computing models. This profound change begins in the data center, which uses deep learning techniques to provide insight into massive amounts of data, primarily for categorizing or identifying images, supporting natural language processing or speech processing, or understanding, generating, or successfully learning how to play complex strategy game. This change has spawned a number of high-performance computing devices (based on GP-GPUs and FPGAs) designed specifically for these types of problems, and later produced fully customizable ASICs that further accelerate and improve deep learning-based systems. Calculate ability.

Big data and fast data

Big data applications use specialized GP-GPUs, FPGAs, and ASIC processors to analyze large data sets through deep learning techniques and reveal trends, patterns, and correlations for image recognition, speech recognition, and more. Therefore, big data is based on past information or static data resident in the cloud. A common function of big data analysis is to perform a specific task of "trained" neural networks, such as identifying and marking all faces in an image or video sequence. Speech recognition also demonstrates the power of neural networks.

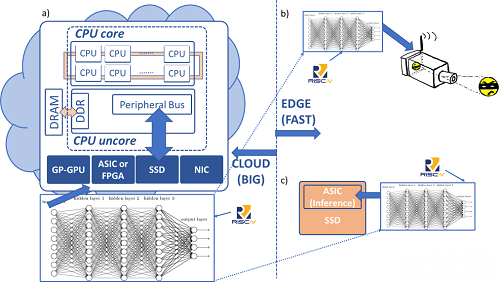

This task* is performed by a specialized engine (or inference engine) that resides directly on the edge device and is guided by a fast data application (Figure 1). By processing locally captured data on edge devices, fast data can leverage real-time decisions and results from big data algorithms. Big data provides insights (predictive analytics) from “what happened in the past†to “what might happen in the future,†while fast data provides real-time actions that improve business decisions, operations, and reduce inefficiencies. So this will definitely affect the final result. These methods can be applied to a variety of edge and storage devices such as cameras, smartphones, and solid state drives.

Calculate on the data

The new workload is based on two scenarios: (1) training a large neural network for a particular workload (such as image or speech recognition); and (2) applying a trained (or "suitable") neural network on the edge device. Both workloads require massively parallel data processing, including multiplication and convolution of large matrices. The most *implementation of these computing functions requires vector instructions that run on large vectors or data arrays. RISC-V is an architecture and ecosystem that is well-suited for this type of application because it provides a standardized set of processes supported by open source software that gives developers complete freedom to adopt, modify, and even add proprietary vector instructions. Some obvious RISC-V computing architecture opportunities are outlined in Figure 1.

Mobile data

The emergence of fast data and edge computing has the practical consequence that moving all data back and forth between the clouds for computational analysis is not an efficient one. First, when transmitting over long distances in mobile networks and Ethernet, it involves relatively large data delay transmissions, which is not ideal for image recognition or speech recognition applications that must operate in real time. Second, computing on edge devices requires a more scalable architecture where image and speech processing or in-memory computing operations on SSDs can be performed in a scalable manner. In this way, each new edge device brings the incremental computing power required, and optimizing the data movement mode and time is a key factor in the scalability of this architecture.

Figure 1: Big Data, Fast Data, and RISC-V Opportunities

In Figure 1a, the cloud data center server performs the functions of machine learning using a deep learning neural network trained on large large data sets. In Figure 1b, the security camera in the edge device uses a big data trained inference engine to identify images (fast data) in real time. In Figure 1c, the intelligent SSD device uses an inference engine for data identification and classification, thereby effectively utilizing the bandwidth of the device. Figure 1 shows the potential opportunities for the RISC-V core, which is free to add proprietary and future standardized vector instructions that are quite effective for processing deep learning and reasoning techniques.

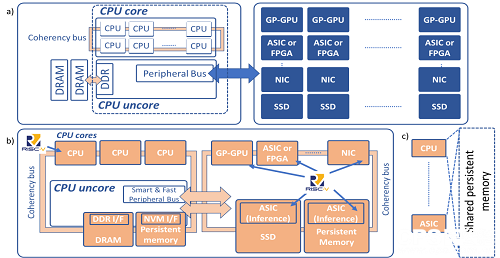

Another similar and important trend is the way data is moved and accessed on the big data side and in the cloud (Figure 2). The traditional computer architecture (Fig. 2a) uses a slow peripheral bus that is connected to many other devices (eg, dedicated machine learning accelerators, graphics cards, high speed solid state drives, intelligent network controllers, etc.). Low-speed bus can affect device utilization because it limits the communication between the bus itself, the main CPU, and the main potentially persistent memory. It is also not possible for these new computing devices to share memory between them or with the host CPU, resulting in futile and restricted data movement on the slow bus.

There are several important industry trends regarding how to improve data movement between different computing devices, such as CPUs and computers and network accelerators, and how to access data in memory or fast storage. These new trends focus on open standardization, providing faster, lower latency serial structures and smarter logic protocols for consistent access to shared memory.

A new generation of data-centric computing

Future architectures will need to deploy open interfaces to connect to persistent memory and fast buses that access compute accelerators and support cache coherency (such as TileLink, RapidIO®, OpenCAPITM, and Gen-Z) to dramatically improve performance. It also allows all devices to share memory and reduce unnecessary data movement.

Figure 2: Data movement and access in the computing architecture

In Figure 2a, the traditional computing architecture has reached its limits by using a slow peripheral bus for fast memory and computational acceleration devices. In Figure 2b, the future computing architecture uses an open interface that provides uniform and cache-consistent access to all computing resources on the platform to access shared persistent memory (this is called a data-centric system). structure). In Figure 2c, the deployed device is able to use the same shared memory, thereby reducing unnecessary data replication.

The role of the CPU peripheral core and network interface controllers will be a key factor in supporting data movement. The CPU peripheral core components must support key memory and permanent memory interfaces (such as NVDIMM-P), as well as memory that resides near the CPU. There is also a need to implement intelligent fast buses for computational accelerators, intelligent networks, and remote persistent memory. Any device on this bus (such as a CPU, general purpose or dedicated compute accelerator, network adapter, memory, or memory) can contain its own computing resources and have the ability to access shared memory (Figure 2b and Figure 2c).

RISC-V technology is a key driver for optimizing data movement because it can execute vector instructions on new computational accelerator devices for new machine learning workloads. It implements a variety of open source CPU technologies that support open memory and intelligent bus interfaces; and implements a data-centric architecture with consistent shared memory.

Solve challenges with RISC-V

Big data and fast data pose challenges for future data movement and paves the way for the RISC-V Instruction Set Architecture (ISA). This open, modular approach to architecture is well suited as the foundation for a data-centric computing architecture. It provides the following features:

· Extended edge computing device computing resources

• Adding new instructions, such as instructions for vector machine learning workload

· Find very close to memory and memory medium, small computing kernel

· Support new computing paradigms and modular chip design methods

Support for new data-centric architecture, where all processing units can access the shared memory lasting through a consistent manner, to optimize data movement

RISC-V is developed by a multitude of members from more than 100 organizations, including a collaborative community of software and hardware innovators. These innovators are able to adapt the ISA to a specific purpose or project. Anyone joining the organization can design, manufacture and/or sell RISC-V chips and software under a "Berkeley Software Distribution" license.

Conclusion

In order to realize its value and possibilities, data needs to be captured, saved, accessed and transformed to reach its full potential. Environments with big data and fast data applications have dwarfed the processing power of general-purpose computing architectures. Future data-centric extreme applications will require processing power for specific applications to support independent expansion of data resources in an open manner.

Having a common open computer architecture centered on data stored in persistent memory while enabling all devices to perform a certain amount of computing, these new types of scalability driven by new types of machine learning computing workloads Key factors in the emergence of the architecture. This new generation of low-energy processing is required for next-generation applications that span all parts of the cloud and edge devices, because specialized computational acceleration processors will be able to focus on the tasks at hand, reducing the time wasted to move data back and forth. Or be able to perform additional calculations that are not related to the data. By harnessing the power, potential, and possibilities of data, humans, society, and our planet can thrive.

Coupling Maintenance: Our company provide coupling maintanence according to client`s request for all different kinds of couplings, especially for Voith Geared Variable Speed Coupling from German and Ebara couplings from Japan. Coupling Maintanence including overhaul for equipment, pipe, quick-wear parts replacement and installation and trial run etc.

If you have any questions, please contact us directly. Welcome to our factory in Shenyang, China.

Coupling Maintenance

Coupling Maintenance,Coupling for Maintenance,Coupling Maintenance Coupling Joint,Flexible Coupling Maintenance

Shenyang German Machine Hydraulic Transmission Machinery Co., Ltd. , http://www.hcouplingc.com